Posted by Jesse JCharis

Feb. 23, 2025, 5:49 a.m.

The Critical Importance of Logging LLM Prompts and Responses

Why Logging LLM Interactions Matters

As Large Language Models (LLMs) become integral to business applications, systematic logging of prompts and responses emerges as a critical requirement. Here's why:

Transparency & Auditability

- Trace decision-making processes

- Meet GDPR/CPRA compliance requirements

- Investigate inappropriate outputs

Model Improvement

- Identify edge cases through real-world usage patterns

- Detect prompt injection attacks

- Analyze common failure modes

Performance Monitoring

- Track latency/quality metrics

- Calculate API costs

- Monitor output consistency

Legal Protection

- Maintain records of AI-generated content

- Demonstrate due diligence

- Support copyright disputes
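The cost and latency bullets under Performance Monitoring can be made concrete with a small aggregation over logged calls. The sketch below is illustrative only: the `Usage` record, its field names, and the prices in `PRICE_PER_1K` are hypothetical and should be replaced with whatever your provider reports and charges.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical prices in USD per 1,000 tokens; substitute your provider's real rates.
PRICE_PER_1K = {"input": 0.01, "output": 0.03}


@dataclass
class Usage:
    """Per-call numbers that would normally be stored in the log metadata."""
    input_tokens: int
    output_tokens: int
    latency_ms: float


def estimate_cost(usage: Usage) -> float:
    """Estimate the cost of one call from its token counts."""
    return (
        usage.input_tokens / 1000 * PRICE_PER_1K["input"]
        + usage.output_tokens / 1000 * PRICE_PER_1K["output"]
    )


def summarize(usages: List[Usage]) -> Dict[str, float]:
    """Aggregate call count, total estimated cost, and mean latency."""
    total_cost = sum(estimate_cost(u) for u in usages)
    avg_latency = sum(u.latency_ms for u in usages) / len(usages) if usages else 0.0
    return {
        "calls": len(usages),
        "total_cost_usd": round(total_cost, 6),
        "avg_latency_ms": avg_latency,
    }
```

Storing token counts and latency alongside each prompt/response pair is what makes this kind of report possible later.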

Monitoring Tools Landscape

1. Specialized LLM Observability Platforms

- Arize AI: Tracks model drift and data quality

- WhyLabs: Monitors statistical distributions of prompts/responses

- LangSmith: Specialized for LangChain workflows

2. General-Purpose MLOps Tools

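    # MLflow tracking example
    # Assumes user_input (the prompt) and output (the model response) are already defined.
    import mlflow

    with mlflow.start_run():
        # Param values have a length limit, so very long prompts may be
        # better stored with log_text.
        mlflow.log_param("prompt", user_input)
        mlflow.log_metric("response_length", len(output))
        mlflow.log_text(output, "response.txt")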

3. Custom Solutions (Recommended for Sensitive Data)

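    # Elasticsearch + Kibana stack
    # Assumes sanitized_input and output are already defined, and that a
    # cluster is reachable at the example address below.
    from datetime import datetime, timezone

    from elasticsearch import Elasticsearch

    es = Elasticsearch("http://localhost:9200")  # example address; add auth/TLS for real clusters

    doc = {
        "prompt": sanitized_input,
        "response": output,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": "gpt-4-0613",
    }
    es.index(index="llm_logs", document=doc)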

Robust Python Implementation

Feature Requirements

- Secure storage with access controls

- Metadata capture (timestamps, model versions)

- PII redaction capabilities

- Asynchronous logging to prevent latency spikes

- Rotation/archiving strategies
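Two of these requirements, asynchronous logging and rotation, can be covered with the standard library alone. The following is a minimal sketch, not the post's implementation: it assumes a single process, and the names `build_async_logger` and `log_interaction` are made up for illustration. Callers only enqueue a record; a background thread owned by `QueueListener` performs the disk write, and `RotatingFileHandler` rolls the file over at `max_bytes`.

```python
import json
import logging
import logging.handlers
import queue


def build_async_logger(path: str, max_bytes: int = 1_000_000, backups: int = 5):
    """Return (logger, listener). Call listener.stop() at shutdown to flush."""
    log_queue: queue.Queue = queue.Queue(-1)  # unbounded queue

    # The file handler does the slow disk I/O and rotates at max_bytes.
    file_handler = logging.handlers.RotatingFileHandler(
        path, maxBytes=max_bytes, backupCount=backups, encoding="utf-8"
    )
    file_handler.setFormatter(logging.Formatter("%(message)s"))

    # The listener drains the queue on a background thread.
    listener = logging.handlers.QueueListener(log_queue, file_handler)
    listener.start()

    logger = logging.getLogger(f"llm_async.{path}")
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep entries out of the root logger
    logger.addHandler(logging.handlers.QueueHandler(log_queue))
    return logger, listener


def log_interaction(logger: logging.Logger, prompt: str, response: str, **metadata) -> None:
    """Enqueue one NDJSON line; returns without waiting for the disk write."""
    logger.info(json.dumps({"prompt": prompt, "response": response, "metadata": metadata}))
```

A typical use would be to call `build_async_logger("llm_logs/llm.ndjson")` once at startup (the directory must already exist), call `log_interaction(...)` from request handlers, and call `listener.stop()` during shutdown so queued entries are written out.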

Complete Logging Class

    import asyncio
    import datetime
    import hashlib
    import json
    import os
    import threading
    import time
    from functools import wraps
    from typing import Any, Dict, List, Optional


    class LLMLogger:
        def __init__(
            self,
            log_dir: str = "llm_logs",
            max_file_size: int = 1_000_000,  # ~1 MB per file
            redact_keys: Optional[List[str]] = None,
        ):
            os.makedirs(log_dir, exist_ok=True)
            self.log_dir = log_dir
            self.max_file_size = max_file_size
            # Avoid a mutable default argument; fall back to the original defaults
            self.redact_keys = redact_keys if redact_keys is not None else ["ssn", "credit_card"]
            self._lock = threading.Lock()  # serialize writes from multiple threads
            # Initialize current log file
            self.current_file = self._get_current_filename()

        def _redact_data(self, data: str) -> str:
            """Basic redaction: masks literal occurrences of each configured term.

            This replaces the terms themselves (e.g. the word "ssn"), not the
            values that follow them, so it is not real PII detection.
            """
            for key in self.redact_keys:
                data = data.replace(key, "[REDACTED]")
            return data

        def _get_current_filename(self) -> str:
            # Microsecond precision, so rotating twice within a minute yields a new name
            stamp = datetime.datetime.now().strftime("%Y%m%d_%H%M%S_%f")
            return os.path.join(self.log_dir, f"llm_log_{stamp}.ndjson")

        def _rotate_file(self) -> None:
            """Switch to a new file once the current one exceeds max_file_size."""
            if os.path.getsize(self.current_file) > self.max_file_size:
                self.current_file = self._get_current_filename()

        def log(
            self,
            prompt: str,
            response: str,
            metadata: Optional[Dict[str, Any]] = None,
            user_id: Optional[str] = None,
        ) -> str:
            """Append one NDJSON entry; returns the prompt hash, or "" on failure."""
            log_entry = {
                "timestamp": datetime.datetime.now(datetime.timezone.utc).isoformat(),
                # Hash of the raw prompt, useful for deduplication and lookups
                "prompt_hash": hashlib.sha256(prompt.encode()).hexdigest(),
                "prompt": self._redact_data(prompt),
                "response": self._redact_data(response),  # responses can echo sensitive terms too
                "metadata": metadata or {},
                "user_id": user_id or "anonymous",
            }
            try:
                with self._lock:
                    with open(self.current_file, "a", encoding="utf-8") as f:
                        # default=str keeps non-JSON metadata values from raising
                        f.write(json.dumps(log_entry, default=str) + "\n")
                    self._rotate_file()
                return log_entry["prompt_hash"]
            except Exception as e:
                # Fallback logging: never let a logging failure break the caller
                print(f"Logging failed: {e}")
                return ""

        def log_async(self, func):
            """Decorator for coroutines that return a (prompt, response) tuple."""
            @wraps(func)
            async def wrapper(*args, **kwargs):
                start = time.perf_counter()
                result = await func(*args, **kwargs)
                prompt, response = result
                metadata = {
                    "latency_ms": (time.perf_counter() - start) * 1000,
                    "function": func.__name__,
                }
                # Run the blocking file write in a worker thread (Python 3.9+)
                # so it does not stall the event loop
                await asyncio.to_thread(self.log, prompt, response, metadata)
                return result
            return wrapper


    # Usage example (synchronous)
    logger = LLMLogger(redact_keys=["password"])
    logger.log(
        prompt="What's our internal API key format?",
        response="I cannot disclose that information.",
        metadata={"model_version": "gpt-4-0125"},
        user_id="user_123",
    )

    # Usage example (async decorator); reuses the logger instance created above
    @logger.log_async
    async def generate_response(prompt: str) -> tuple:
        # llm_api is a placeholder for your async LLM client call
        response = await llm_api(prompt)
        return prompt, response
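The class's `_redact_data` method masks the configured terms themselves rather than the sensitive values. Where the goal is to mask values such as card numbers, a pattern-based pass is one option. The sketch below is an assumption-laden illustration, not part of the original class: the three patterns are deliberately simple, cover only US-style SSNs, 13 to 16 digit card numbers, and basic email addresses, and will miss many real-world formats.

```python
import re

# Illustrative patterns only; real PII detection needs much broader coverage.
PII_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens
    "CREDIT_CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def redact_values(text: str) -> str:
    """Replace every match of each pattern with a labeled placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[REDACTED_{label}]", text)
    return text
```

A function like this could be applied to both the prompt and the response before they are written, with a dedicated library such as Presidio taking over when regexes stop being enough.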

Advanced Features in Action

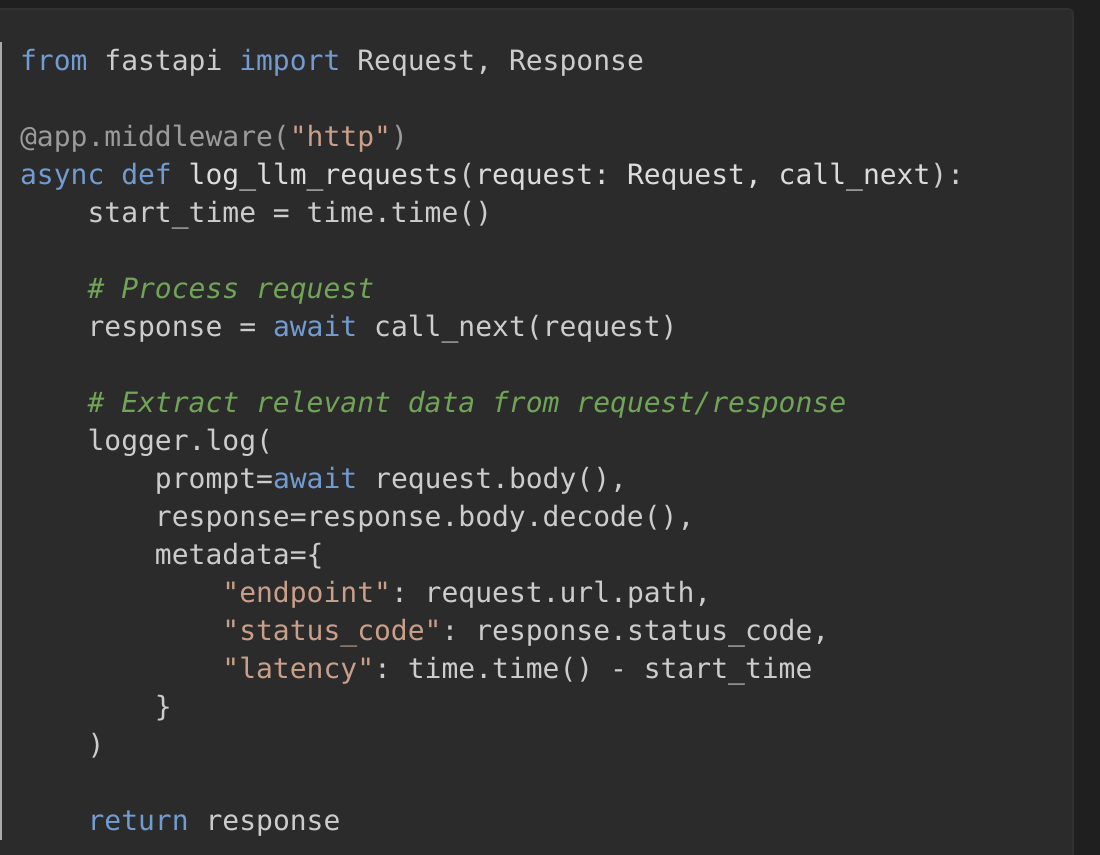

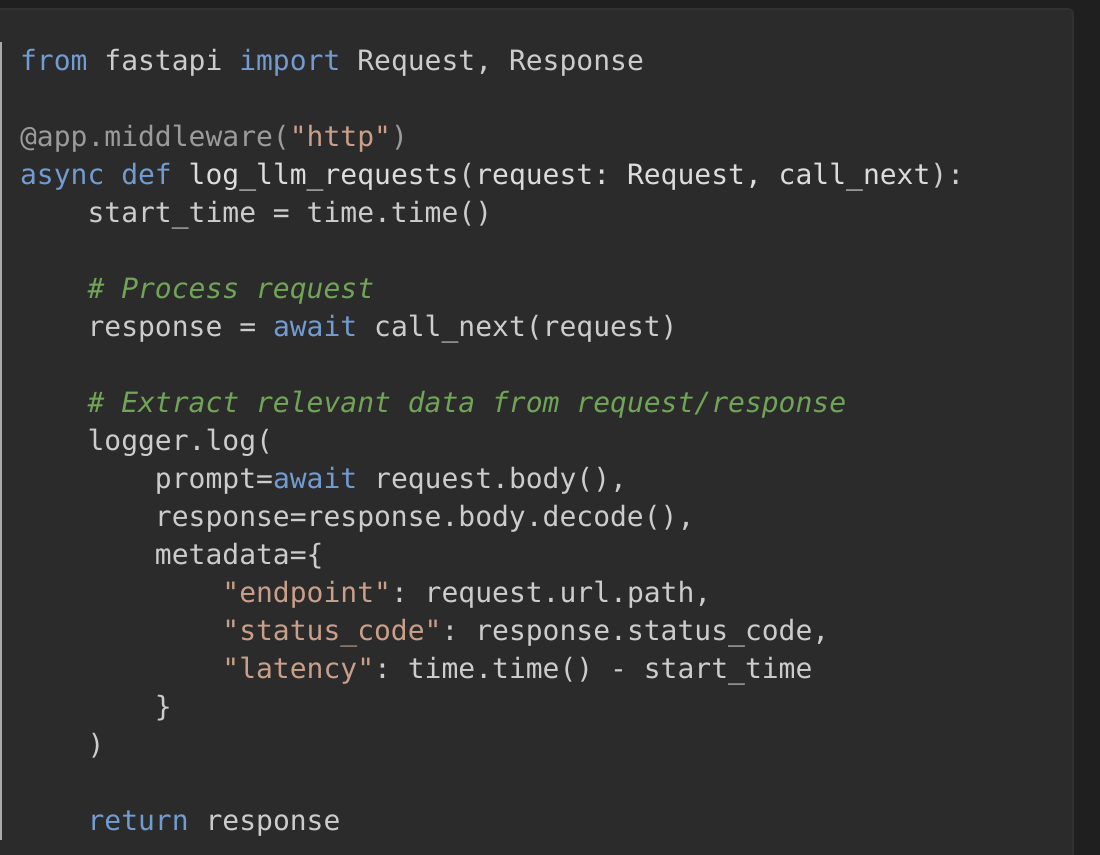

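- FastAPI Middleware Integration

    import time

    from fastapi import FastAPI, Request, Response

    app = FastAPI()
    logger = LLMLogger()

    @app.middleware("http")
    async def log_llm_requests(request: Request, call_next):
        start_time = time.time()
        # Read the body before passing the request on; recent Starlette
        # versions cache it so the route handler can still read it
        request_body = await request.body()

        # Process request
        response = await call_next(request)

        # call_next returns a streaming response with no .body attribute,
        # so collect the chunks and rebuild the response afterwards
        response_body = b"".join([chunk async for chunk in response.body_iterator])

        logger.log(
            prompt=request_body.decode("utf-8", errors="replace"),
            response=response_body.decode("utf-8", errors="replace"),
            metadata={
                "endpoint": request.url.path,
                "status_code": response.status_code,
                "latency": time.time() - start_time,
            },
        )
        return Response(
            content=response_body,
            status_code=response.status_code,
            headers=dict(response.headers),
            media_type=response.media_type,
        )

- Automated Alerting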

    class MonitoringService:
        def __init__(self):
            self.logger = LLMLogger()

        def check_response_quality(self):
            # load_last_hour_logs() and alert_team() are placeholders that this
            # post does not define; supply your own implementations
            recent_logs = self.load_last_hour_logs()

            # Flag hedging or boilerplate phrasing, a rough proxy for low-confidence answers
            uncertain_responses = [
                log for log in recent_logs
                if any(
                    phrase in log["response"].lower()
                    for phrase in ("i'm not sure", "as an ai")
                )
            ]
            if len(uncertain_responses) > 10:
                alert_team(f"High uncertainty rate: {len(uncertain_responses)}/hour")
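The alerting check above relies on a loader for the last hour of logs, which is left undefined. One possible shape for it, assuming the `.ndjson` files and ISO-format `timestamp` field written by `LLMLogger`, is sketched here. The function name `load_recent_logs` and its `now` parameter (there to make the function testable) are hypothetical.

```python
import glob
import json
import os
from datetime import datetime, timedelta
from typing import Dict, List, Optional


def _aware(dt: datetime) -> datetime:
    """Treat naive datetimes as local time so all comparisons are like-for-like."""
    return dt if dt.tzinfo is not None else dt.astimezone()


def load_recent_logs(
    log_dir: str = "llm_logs",
    hours: float = 1.0,
    now: Optional[datetime] = None,
) -> List[Dict]:
    """Return entries from *.ndjson files whose timestamp falls in the last `hours`."""
    cutoff = _aware(now or datetime.now()) - timedelta(hours=hours)
    entries = []
    for path in sorted(glob.glob(os.path.join(log_dir, "*.ndjson"))):
        with open(path, encoding="utf-8") as f:
            for line in f:
                line = line.strip()
                if not line:
                    continue
                try:
                    entry = json.loads(line)
                    ts = _aware(datetime.fromisoformat(entry["timestamp"]))
                except (ValueError, KeyError, TypeError):
                    continue  # skip malformed lines rather than failing the check
                if ts >= cutoff:
                    entries.append(entry)
    return entries
```

Scanning every file on each check is fine for small log directories; at higher volume, filtering by file modification time first or querying a log store would be more appropriate.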

Best Practices

- Data Anonymization

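    from presidio_analyzer import AnalyzerEngine

    analyzer = AnalyzerEngine()

    def advanced_redaction(text: str) -> str:
        results = analyzer.analyze(text=text, language="en")
        # Replace from the end of the string backwards so earlier offsets stay
        # valid; str.replace would also mask unrelated matching substrings.
        # Assumes the detected spans do not overlap.
        for result in sorted(results, key=lambda r: r.start, reverse=True):
            text = text[:result.start] + "[REDACTED]" + text[result.end:]
        return text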

- Secure Storage

- Encrypt logs at rest (AWS KMS/GCP Cloud KMS)

- Implement role-based access control (RBAC)

- Use immutable storage buckets for audit logs
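Immutable buckets are a storage-level control. At the application level, a hash chain is a complementary way to make tampering with audit logs detectable. It is not something the setup above includes, and the field names `prev_hash` and `entry_hash` are illustrative. Each entry's hash covers the previous entry's hash, so editing or deleting any earlier entry invalidates every later one.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: Dict, prev_hash: str) -> str:
    # sort_keys gives a stable serialization, so the hash is reproducible
    payload = json.dumps(body, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def chain_entry(entry: Dict, prev_hash: str) -> Dict:
    """Return a copy of `entry` linked to the previous entry's hash."""
    return {**entry, "prev_hash": prev_hash, "entry_hash": _digest(entry, prev_hash)}


def verify_chain(entries: List[Dict]) -> bool:
    """Recompute every hash in order; False means something was altered."""
    prev = GENESIS
    for e in entries:
        body = {k: v for k, v in e.items() if k not in ("prev_hash", "entry_hash")}
        if e.get("prev_hash") != prev or e.get("entry_hash") != _digest(body, prev):
            return False
        prev = e["entry_hash"]
    return True
```

This only detects changes; it does not prevent them, so it works best alongside the access controls and immutable storage listed above.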

- Retention Policies

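    import os
    import time

    def apply_retention_policy(log_dir: str = "llm_logs", days: int = 30):
        """Delete log files last modified more than `days` days ago."""
        cutoff = time.time() - days * 86400
        for f in os.listdir(log_dir):
            path = os.path.join(log_dir, f)
            if os.path.isfile(path) and os.stat(path).st_mtime < cutoff:
                os.remove(path)

- Monitoring Thresholds

| Metric | Alert threshold | Check frequency |
| --- | --- | --- |
| … | >1% of responses | Real-time + Daily |
| Average response length | ±20% from baseline | Hourly |
| PII leakage | Any occurrence | Real-time |
| API latency | >5s p95 | 5-minute rolling |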
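The thresholds for response length, PII leakage, and API latency can be checked with a few lines of standard-library code. This is a rough sketch under stated assumptions: the function and alert names are invented for illustration, the inputs are assumed to have been extracted from the logs already, and the first table row is skipped because its metric name is not recoverable.

```python
import statistics
from typing import List


def p95(values: List[float]) -> float:
    """95th percentile; quantiles(n=20) returns 19 cut points, the last is p95."""
    return statistics.quantiles(values, n=20, method="inclusive")[-1]


def check_thresholds(
    latencies_s: List[float],
    response_lengths: List[int],
    baseline_length: float,
    pii_hits: int,
) -> List[str]:
    """Return the names of the thresholds that were breached."""
    alerts = []
    # API latency: alert when p95 exceeds 5 seconds (needs at least 2 samples)
    if len(latencies_s) >= 2 and p95(latencies_s) > 5.0:
        alerts.append("latency_p95")
    # Average response length: alert on more than 20% deviation from baseline
    if response_lengths and baseline_length > 0:
        avg = statistics.fmean(response_lengths)
        if abs(avg - baseline_length) / baseline_length > 0.20:
            alerts.append("response_length")
    # PII leakage: any occurrence is an alert
    if pii_hits > 0:
        alerts.append("pii_leakage")
    return alerts
```

Running this on a schedule that matches the check frequency for each metric, for example every five minutes for latency, keeps the checks aligned with the table.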

Conclusion

Effective LLM logging requires both technical implementation and organizational policies:

Technical Implementation

- Use the provided LLMLogger class as a foundation

- Add environment-specific security controls

- Integrate with existing monitoring stacks

Organizational Policies

- Define retention periods (30-90 days recommended)

- Establish access approval workflows

- Conduct regular log audits

For enterprise deployments, consider combining custom logging with commercial observability platforms such as Datadog or Splunk.

Final Recommendation: Start with the custom Python implementation above for full control over sensitive data handling while evaluating commercial solutions for advanced analytics needs.

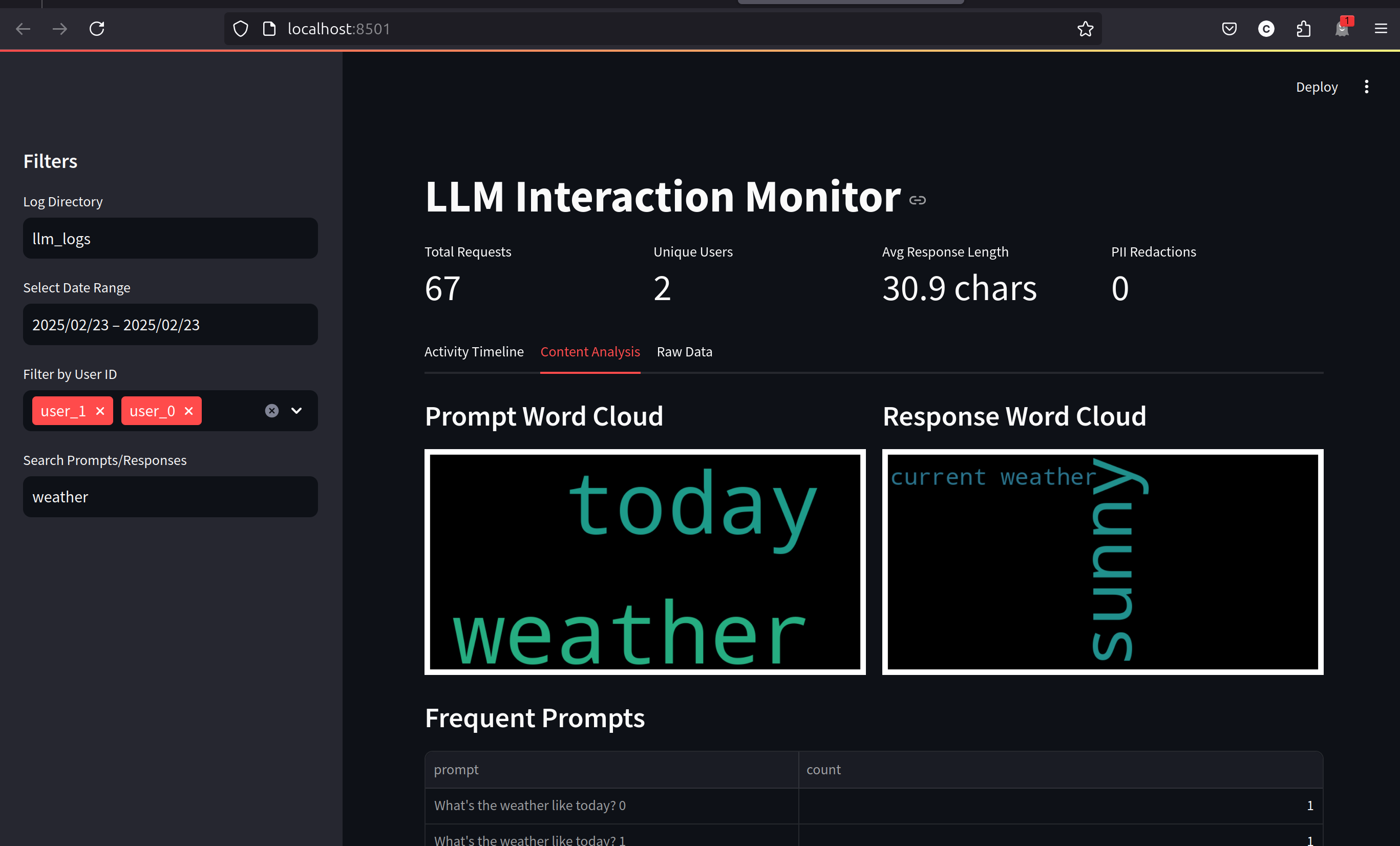

We can also visualize our logs in Streamlit.

Happy Coding

Jesus Saves
